Hire LLM Developer

Your product only wins if it speaks your customer’s language flawlessly. Our LLM engineers step into your sprint on day one, pick up tickets without hand-holding, and ship pipelines that move from prototype to production in weeks—not quarters. They handle everything from model selection and fine-tuning with your proprietary data to RAG integration, prompt-layer versioning, red-team testing, and on-call monitoring, so you launch with confidence and scale without re-engineering.

What We Offer

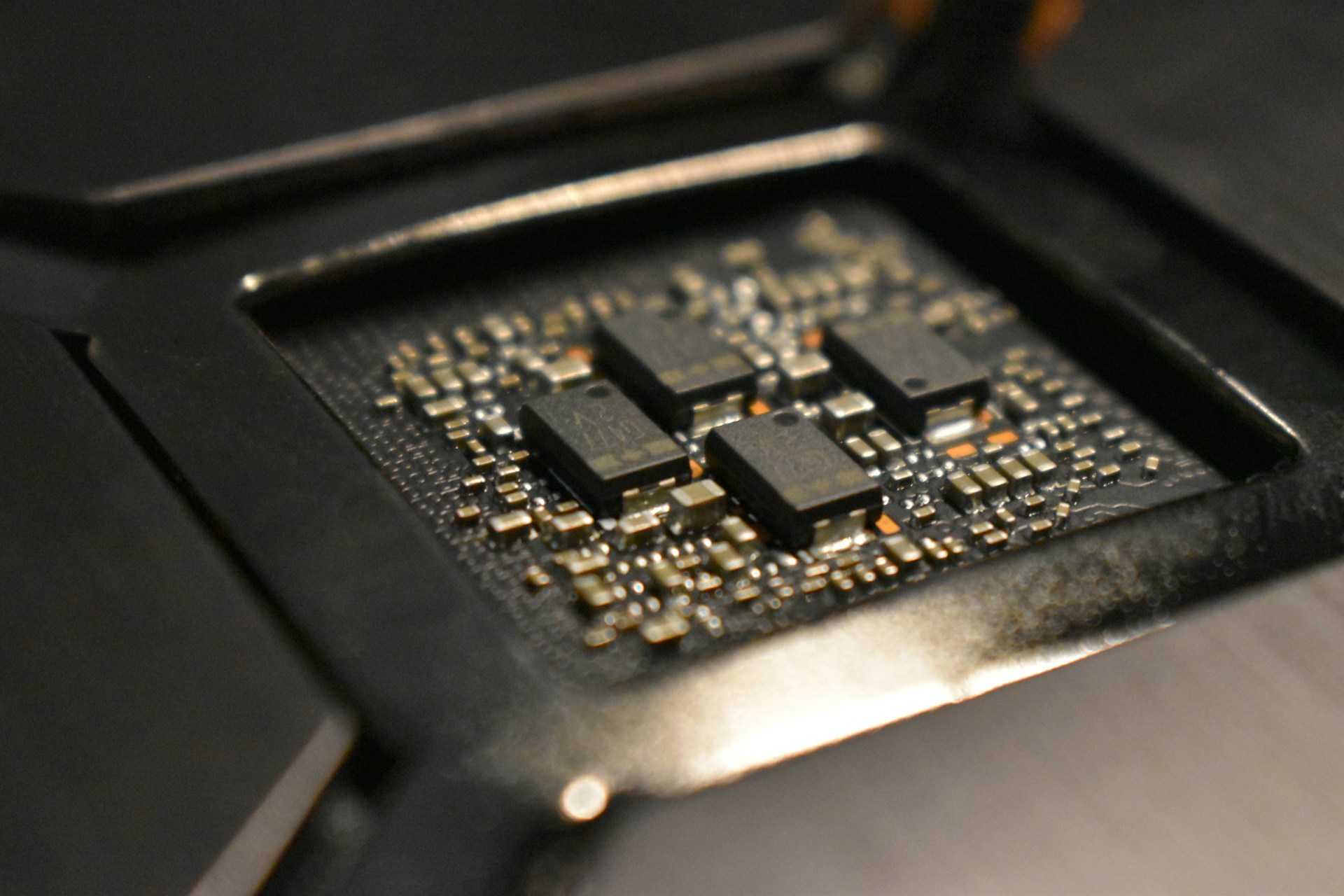

Architecture & Model Selection

We benchmark open-source and commercial models against your latency, cost, and privacy targets, then map out a scalable serving topology—GPU, CPU, or mixed—that your cloud budget can stomach.

Data Engineering & Fine-Tuning

From data contracts to vector store schema, we clean, label, and chunk domain material, then run low-rank adaptation (LoRA/QLoRA) and full-parameter fine-tuning where gains justify the spend. Every experiment lands in a lineage-tracked registry, so reproducibility never slips.
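As a rough illustration of the chunking step, here is a minimal sketch that splits a document into overlapping word windows. The function name `chunk_text` and the default sizes are assumptions chosen for the example, not a fixed part of our pipeline; real projects often chunk by tokens or by document structure instead.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

The overlap keeps sentences that straddle a boundary retrievable from at least one chunk, at the cost of storing some words twice.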

Retrieval-Augmented Generation (RAG) Pipelines

We design hybrid search that fuses dense and sparse retrieval, cache embeddings to slash query time, and stitch in guard-token re-ranking. The result: answers grounded in your source of truth, not the model’s imagination.
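One common way to fuse dense and sparse results is reciprocal rank fusion (RRF). The sketch below is illustrative only: the document IDs are made up, `k = 60` is the conventional default rather than a tuned value, and a given project may call for a different fusion method.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one fused ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Each list contributes 1 / (k + rank); higher ranks count more.
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by fused score, breaking ties by ID so the output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a vector index and a keyword index.
dense = ["doc_a", "doc_b", "doc_c"]
sparse = ["doc_b", "doc_d", "doc_a"]
fused = reciprocal_rank_fusion([dense, sparse])
```

Documents that appear near the top of both lists rise to the top of the fused ranking, which is why `doc_b` ends up ahead of `doc_a` here.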

LLM Ops

Blue-green rollouts, canary prompts, GPU autoscaling, token-level logging—every release ships through an opinionated pipeline built for rollback in seconds, not hours. Real-time dashboards flag drift, latency spikes, and cost anomalies before finance does.
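To make the canary-prompt idea concrete, here is a small sketch of deterministic traffic splitting. The names `pick_prompt_version`, `prompt-v1`, and `prompt-v2` are hypothetical; the point is that hashing the user ID keeps each user on the same prompt version for as long as the canary percentage stays fixed.

```python
import hashlib


def pick_prompt_version(
    user_id: str,
    canary_percent: int,
    stable: str = "prompt-v1",
    canary: str = "prompt-v2",
) -> str:
    """Route a fixed share of users to the canary prompt, the rest to stable."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return canary if bucket < canary_percent else stable
```

Setting `canary_percent` back to 0 sends every user to the stable prompt again, which is what makes rollback a configuration change rather than a redeploy.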

Conversational UX & Product Integration

We wire chat, voice, and agentic workflows into web, mobile, and backend services. Frontends stay snappy with streaming tokens; backends sync through event buses, so your product team iterates without rewriting business logic.

How an LLM Developer Pushes Your Business Forward

A dedicated LLM engineer closes the gap between bold product ideas and stable, ship-ready code.

1. Faster Time-to-Market

Reusable scaffolds for data prep, fine-tuning, and CI/CD shave weeks off the first MVP release and keep feature velocity high after launch.

2. Lower Inference Cost Curve

We tune context windows, quantize weights, and cache embeddings to get more out of each token, cutting monthly bills while holding answer quality steady.
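Embedding caching, for instance, can be as simple as the sketch below. `EmbeddingCache` and the `embed_fn` callable are assumptions made for illustration; in practice `embed_fn` would wrap whichever embedding model or API a project uses, and the store would usually live in Redis or a similar shared cache rather than in process memory.

```python
import hashlib
from typing import Callable


class EmbeddingCache:
    """In-memory cache keyed by a hash of whitespace-normalized text."""

    def __init__(self, embed_fn: Callable[[str], list[float]]):
        self._embed_fn = embed_fn
        self._store: dict[str, list[float]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, text: str) -> list[float]:
        # Collapse whitespace so trivially different inputs share one entry.
        normalized = " ".join(text.split())
        key = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        vector = self._embed_fn(normalized)  # the only paid call
        self._store[key] = vector
        return vector
```

The `hits` and `misses` counters give a quick read on how much repeated traffic the cache is absorbing.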

3. Higher Answer Accuracy

Domain-specific evaluation suites catch hallucinations before users do, while continuous feedback loops retrain the model on real traffic to lift hit rates.
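A domain evaluation suite can start very small. The sketch below scores a model by whether each answer contains the facts it must mention; the test cases, the `must_contain` field, and the `stub_answer` function are all invented for the example, and production suites typically add graded rubrics or model-based judges on top of string checks like this.

```python
from typing import Callable


def hit_rate(cases: list[dict], answer_fn: Callable[[str], str]) -> float:
    """Share of cases whose answer mentions every required fact."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    hits = 0
    for case in cases:
        answer = answer_fn(case["question"]).lower()
        if all(fact.lower() in answer for fact in case["must_contain"]):
            hits += 1
    return hits / len(cases)


# Hypothetical cases; a real suite would be drawn from domain documents.
CASES = [
    {"question": "What is the refund window?", "must_contain": ["30 days"]},
    {"question": "Which plan includes SSO?", "must_contain": ["enterprise"]},
]


def stub_answer(question: str) -> str:
    """Stand-in for a model call; the second answer is deliberately wrong."""
    canned = {
        "What is the refund window?": "Refunds are accepted within 30 days.",
        "Which plan includes SSO?": "SSO ships with the Pro plan.",
    }
    return canned[question]


score = hit_rate(CASES, stub_answer)
```

Running the same suite on every candidate model or prompt change turns "did quality drop?" into a number that can gate a release.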

4. Continuous Model Health

Latency, drift, and abuse signals stream into Grafana and PagerDuty, so ops teams act on minutes-old metrics instead of postmortems.

5. Tighter Security Posture

Red-team prompt banks and policy transformers block data leaks, jailbreaks, and toxic output—keeping auditors satisfied and users protected.

6. Better Product-Team Synergy

Our developers work inside your agile rituals, demoing each sprint, writing clear docs, and handing off patterns your engineers can reuse instead of reinventing.

Challenges We Clear Out of Your Way

LLM initiatives fail for predictable reasons. We cut through the most common roadblocks.

Hit a similar snag? Let’s unblock it fast.

Why Team Up With Us

Great model work only sticks when the crew behind it covers every angle—code, data, ops, and product. Here’s how we outpace niche shops and off-the-shelf vendors.

1. Cross-Functional DNA

Our squads pair NLP scientists with backend, DevOps, and UX engineers in the same stand-ups, so decisions on embeddings, infra, and interface happen in one loop—not three hand-offs.

2. Proven Playbooks, Not Guesswork

We’ve shipped LLM features for fintech, health, and SaaS products; each new build starts from hardened templates for data contracts, prompt tests, and blue-green rollout—cutting risk even under tight deadlines.

3. Obsessed With Cost-Fit Engineering

GPU hours aren’t cheap. We benchmark quantization, pruning, and caching strategies until cost curves flatten, then bake alerts into your dashboards so spend never drifts silently upward.

4. Security Woven In, Not Bolted On

Token-level tracing, policy transformers, and encrypted vector stores ship from sprint zero. Auditors get the logs they need without slowing the release train.

5. Knowledge Transfer Built Into the Contract

Every pipeline, prompt, and dashboard lands in your repo with inline docs and recorded walkthroughs. Your team owns the keys, so you’re never hostage to a black-box vendor.

Cooperation Models

Our engagement choices flex to match your roadmap and in-house bandwidth.

We drop a fully cross-functional pod—model engineer, data lead, DevOps, and QA—into your Slack and sprint cadence. You set priorities; we own delivery. Perfect for green-field builds or aggressive feature rollouts.

Already have engineers but need deep LLM expertise? Tap one or two specialists for spikes in research, fine-tuning, or performance hardening. Billing by the sprint keeps spend predictable.

We architect, code, and scale the entire LLM platform, then shift ownership to your team through pair-programming, docs, and shadow rotations. You keep the IP, we exit clean.

Our Experts Team Up With Major Players

Partnering with forward-thinking companies, we deliver digital solutions that empower businesses to reach new heights.

Our Delivery Path

A disciplined, five-step loop lets us move fast without breaking things.

Scoping Workshop

In a single day we lock down user journeys, success metrics, data sources, and guardrail needs, then write a backlog sized for two sprints.

Data & Feasibility Audit

We profile every dataset, flag gaps, map privacy constraints, and benchmark candidate models so the next steps rest on hard numbers, not hunches.

Prototype in Two Sprints

A thin-slice demo with real data proves latency, cost, and answer quality. Stakeholders give feedback while the codebase is still nimble.

Harden & Deploy

We add safety filters, CI/CD, autoscaling, and observability, then push to staging and production behind feature flags and canary prompts.

Monitor & Iterate

Post-launch dashboards track drift, abuse, and spend. Weekly cadence reviews feed back into data labeling and prompt tuning, locking in continuous gains.

Client Successes

Explore our case studies to see how our solutions have empowered clients to achieve business results.

Hire LLM Developer FAQ

Sign the contract on Monday, and a developer joins your first planning session by Friday.

Yes. We isolate PHI/PII in encrypted stores, apply role-based access at query time, and run all training inside your VPC.

Not necessarily. We start with small quantized models on spot instances, then scale only when traffic and accuracy gains justify it.

Both. We weigh latency, cost, licensing, and privacy, then pick the option with the best long-term fit for your use case.

Continuous evaluation pipelines score live traffic, surface drift, and trigger retraining jobs before users notice a dip in quality.