AI Governance Frameworks & Generative AI Oversight

Artificial intelligence is no longer an emerging technology reserved for research labs and early adopters. It now drives decision-making, powers customer experiences, and automates critical business processes across industries. But as AI becomes more embedded in daily operations, the stakes for using it responsibly have never been higher.

The challenge? AI is not just another software system. It learns, adapts, and, in the case of generative AI (GenAI), creates entirely new content. Without guardrails, these capabilities can lead to outcomes that are biased, inaccurate, or even harmful. And with global regulators moving quickly to establish new AI laws, enterprises face a dual responsibility: to innovate and to comply.

That’s where AI governance comes in. A well-designed governance framework gives organizations a roadmap to develop and deploy AI that is ethical, compliant, and aligned with business goals. In this guide, we’ll break down what AI governance means, how it differs for generative AI, and the concrete steps enterprises can take to implement it effectively.

What Is an AI Governance Framework?

At its core, an AI governance framework is a set of policies, standards, and processes that define how an organization builds, uses, and monitors AI systems. It is both a strategic tool (ensuring AI supports business objectives) and a compliance tool (reducing legal, ethical, and operational risks).

A comprehensive AI governance framework typically includes:

- Policy layer – Organization-wide rules for AI development and deployment, covering data acquisition, consent, storage, and retention; algorithm transparency; and decision-making authority.

- Standards layer – Reference to recognized guidelines such as the NIST AI Risk Management Framework, the ISO/IEC 42001 AI Management System Standard, and the OECD AI Principles.

- Oversight structures – Formal committees or roles (e.g., AI ethics officer, governance board) that review AI projects before launch, monitor them in production, and lead remediation if issues arise.

Why it matters:

Without governance, AI adoption can lead to unintended consequences — from discriminatory hiring algorithms to chatbots giving inaccurate legal or medical advice. A governance framework helps ensure AI is trustworthy, explainable, and fair.

AI Model Governance Explained

While an AI governance framework defines the overall approach, AI model governance focuses on individual models throughout their lifecycle. This includes:

- Development stage – Ensuring training data is lawful, representative, and free from harmful bias. Documenting data provenance and preprocessing steps.

- Testing & validation stage – Evaluating the model’s accuracy, robustness, and fairness using both statistical and domain-specific tests.

- Deployment stage – Monitoring model behavior in real-world conditions; implementing safeguards against drift, bias creep, or performance degradation.

- Maintenance stage – Scheduling retraining cycles, updating model documentation, and logging all changes for audit purposes.

- Retirement stage – Establishing clear criteria for when a model should be decommissioned or replaced.
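The drift safeguard mentioned under the deployment stage can be approximated with a Population Stability Index (PSI) check on a single numeric feature. This is a minimal sketch, not a prescribed method: the function name, the ten-bin default, and the 0.2 alert threshold (a commonly quoted rule of thumb) are all assumptions to tune for your own models.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a production sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p, q))


DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb value, not a regulatory figure

baseline = [i / 100 for i in range(100)]          # training-time feature values
production = [0.5 + i / 200 for i in range(100)]  # values shifted toward the top half
drift_detected = population_stability_index(baseline, production) > DRIFT_THRESHOLD
```

A scheduled job could run a check like this per feature and open a governance ticket whenever `drift_detected` is true.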

Example:

A credit risk assessment model in a bank may pass internal accuracy tests but still fail a governance review if it disproportionately rejects applications from a protected demographic group. Model governance ensures that such risks are caught and mitigated early.
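One way to make the credit example concrete is a disparate impact ratio: the approval rate of the protected group divided by that of a reference group. The sketch below is illustrative only. The group labels and sample decisions are invented, and the 0.8 cutoff borrows the "four-fifths" rule of thumb from US employment-selection guidance, which a bank would need to replace with whatever threshold its own legal team sets.

```python
from collections import defaultdict


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, protected, reference):
    """Approval rate of the protected group relative to the reference group."""
    rates = approval_rates(decisions)
    return rates[protected] / rates[reference]


# Hypothetical model decisions: group A approved 80/100, group B approved 50/100.
decisions = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)

ratio = disparate_impact_ratio(decisions, protected="B", reference="A")
passes_review = ratio >= 0.8  # assumed threshold; set by policy, not by this code
```

Here the model would be flagged for review even if its overall accuracy were high, which is the situation the example describes.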

Generative AI Governance: Why It’s Different

Generative AI is powerful precisely because it can create — text, images, audio, code — on demand. But that creative freedom comes with risks traditional AI governance often doesn’t address.

Key differences:

- Unpredictable outputs – Large language models can produce factually incorrect information (hallucinations), even when trained on high-quality data.

- Prompt manipulation risks – Adversarial prompts or “prompt injection” can bypass safety filters and cause models to generate harmful or confidential content.

- Copyright & IP concerns – Some GenAI models are trained on copyrighted material, raising legal questions about derivative works.

- Ethical misuse potential – Deepfakes, misinformation campaigns, and synthetic identities can be created at scale.

Because of these factors, GenAI governance requires additional controls, including:

- Output review mechanisms – Automated content scanning for compliance, toxicity, and bias.

- Usage monitoring – Tracking how models are being prompted and by whom.

- Access management – Restricting use of certain model capabilities to approved users or contexts.
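The three controls above can be combined in a thin wrapper around any text-generation function. The following is a rough sketch under stated assumptions: `GovernedModel`, the role names, and the single regex pattern are hypothetical, and a production system would use a real policy engine and content classifier rather than one regular expression.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Illustrative pattern only: strings shaped like US Social Security numbers.
BLOCKED_PATTERNS = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]
ALLOWED_ROLES = {"analyst", "support_agent"}  # hypothetical approved roles
WITHHELD = "[output withheld pending review]"


@dataclass
class GovernedModel:
    generate: Callable[[str], str]  # any function mapping a prompt to text
    usage_log: list = field(default_factory=list)

    def ask(self, user: str, role: str, prompt: str) -> str:
        # Access management: only approved roles may call the model.
        if role not in ALLOWED_ROLES:
            raise PermissionError(f"role {role!r} is not approved for this model")
        output = self.generate(prompt)
        # Output review: flag text that matches a blocked pattern.
        flagged = any(p.search(output) for p in BLOCKED_PATTERNS)
        # Usage monitoring: record who prompted what, and the outcome.
        self.usage_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "prompt": prompt,
            "flagged": flagged,
        })
        return WITHHELD if flagged else output
```

Keeping the checks in one wrapper means every call path gets the same treatment, whether the underlying model is internal or a third-party API.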

Core Principles of GenAI Governance

For organizations adopting generative AI, the governance framework should be built around four principles:

Trustworthiness

Users should have confidence in the reliability and safety of GenAI outputs. This means setting clear performance expectations, publishing model limitations, and regularly validating outputs.

Transparency

Explain how outputs are generated, what datasets were used for training, and what safeguards are in place. Transparency is critical both for user trust and regulatory compliance.

Accountability

Every GenAI project should have a named owner responsible for overseeing the model’s lifecycle, reviewing incidents, and ensuring policy adherence.

Adaptability

GenAI evolves quickly. Governance policies must be revisited and updated to address emerging threats, model updates, or changes in law.

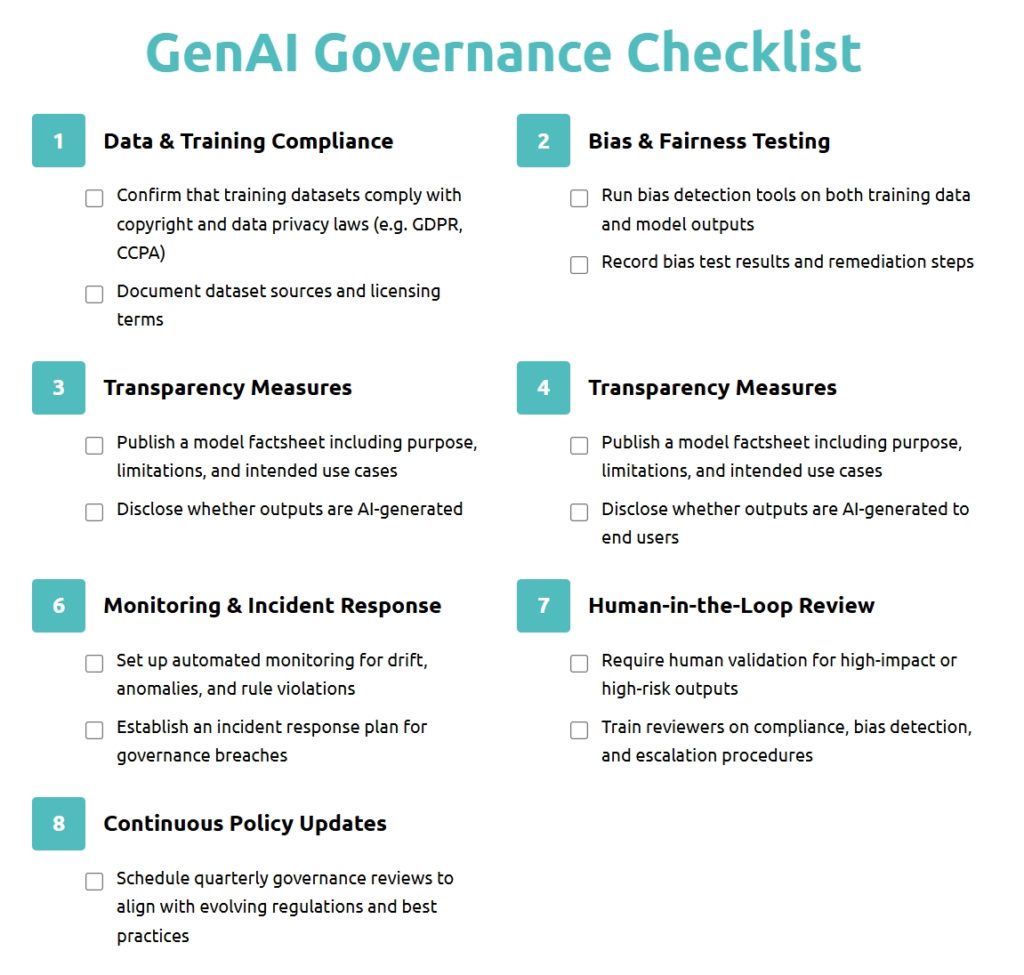

A GenAI governance checklist can simplify implementation — covering risk assessment, compliance checks, human-in-the-loop validation, and output logging.
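Such a checklist can also live in code so that a release pipeline can query it. The sketch below assumes a simple data model: the `ChecklistItem` fields and the owner names are hypothetical, while the four item names come from the list above.

```python
from dataclasses import dataclass


@dataclass
class ChecklistItem:
    name: str
    owner: str
    done: bool = False


def open_items(checklist):
    """Names of checklist items that are still open."""
    return [item.name for item in checklist if not item.done]


def ready_for_launch(checklist):
    """True only when every checklist item has been closed."""
    return not open_items(checklist)


checklist = [
    ChecklistItem("risk assessment", owner="risk team", done=True),
    ChecklistItem("compliance checks", owner="legal", done=True),
    ChecklistItem("human-in-the-loop validation", owner="product owner"),
    ChecklistItem("output logging", owner="platform team", done=True),
]

blocking = open_items(checklist)  # items that still block launch
```

A deployment script could refuse to promote a model while `ready_for_launch(checklist)` is false.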

Global AI Governance Standards & Regulations

AI regulation is not uniform. While some regions rely on voluntary guidelines, others are enacting binding laws.

Examples of leading frameworks:

| Standard / Regulation | Scope | Current Status |
|---|---|---|
| NIST AI RMF (US) | Risk-based approach for AI trustworthiness | Voluntary; widely adopted |
| EU AI Act | Categorizes AI by risk level with compliance requirements | Passed in 2024; phased rollout begins 2025 |
| ISO/IEC 42001 | AI management system standard | Published in 2023 |
| OECD AI Principles | Ethical and governance principles | Adopted by 46+ countries |

Enterprise tip:

If your business operates globally, align your internal governance policies with the strictest applicable regulation. This reduces compliance complexity and helps future-proof the program against new rules.

Building an Enterprise AI Governance Program

An effective governance program is built in five phases:

- Assess – Catalog all AI systems in use, evaluate current oversight measures, and identify gaps.

- Design – Define governance policies tailored to your industry, regulatory obligations, and business objectives.

- Implement – Assign governance roles, deploy monitoring tools, and integrate oversight into development pipelines.

- Monitor – Continuously check outputs, performance, and risk indicators.

- Adapt – Update processes and controls as models evolve and laws change.

Practical example:

A healthcare provider integrating an AI-powered diagnostic tool might first assess whether its data handling meets HIPAA requirements, then design governance rules for model usage, implement monitoring for diagnostic accuracy, and schedule quarterly reviews to adjust policies as regulations evolve.

Tools & Technologies for Governance

The right tools can make AI governance scalable and efficient.

| Tool Type | Example Vendors | Primary Function |
|---|---|---|
| Bias detection & mitigation | Fiddler AI, Arthur AI | Identify and correct bias in datasets and outputs |
| Model monitoring | WhyLabs, Evidently AI | Detect drift, anomalies, and performance degradation |
| Output auditing | Lakera, Guardrails AI | Automatically scan and flag non-compliant or harmful outputs |

Selection criteria:

Choose tools that align with your AI architecture, risk tolerance, and compliance requirements. Open-source options may suit smaller teams, while larger organizations often need integrated commercial platforms.

KPIs & Continuous Improvement

Governance is never “set it and forget it.” Track progress with measurable KPIs:

- Compliance rate – Share of AI projects passing governance checks.

- Audit pass rate – Internal and third-party audit success rate.

- Incident frequency – Number of governance-related issues detected per reporting period.

- Time-to-remediation – How quickly risks are addressed after detection.

Regular reviews of these metrics help identify where governance is strong and where additional resources are needed.
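These KPIs are simple to compute once review outcomes and incident timestamps are recorded. The sketch below assumes a hypothetical data layout (a list of pass/fail flags and a list of detected/remediated timestamp pairs for one quarter); the sample values are invented for illustration.

```python
from datetime import datetime
from statistics import mean


def compliance_rate(review_results):
    """Share of AI projects that passed their governance check (0.0 to 1.0)."""
    return sum(review_results) / len(review_results)


def mean_time_to_remediation_hours(incidents):
    """Average hours between detection and remediation of an incident."""
    return mean([
        (fixed - found).total_seconds() / 3600 for found, fixed in incidents
    ])


# Hypothetical quarter: four projects reviewed, two incidents handled.
review_results = [True, True, False, True]
incidents = [
    (datetime(2025, 1, 1, 9, 0), datetime(2025, 1, 1, 15, 0)),   # fixed in 6 hours
    (datetime(2025, 1, 3, 10, 0), datetime(2025, 1, 4, 10, 0)),  # fixed in 24 hours
]

kpis = {
    "compliance_rate": compliance_rate(review_results),
    "incident_frequency": len(incidents),
    "time_to_remediation_hours": mean_time_to_remediation_hours(incidents),
}
```

Tracking the same dictionary quarter over quarter gives the trend line that the review meetings need.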

Future of AI & GenAI Governance

The future of AI governance will likely involve:

- Self-auditing AI systems that flag compliance issues automatically.

- Automated regulation mapping to adjust policies for different regions.

- Cross-border interoperability to support multinational AI development.

As AI models become more autonomous, governance will likely shift from reactive oversight to proactive, AI-driven risk management.

Final Thoughts

AI governance is no longer a “nice-to-have” — it’s a business necessity. For traditional AI, it ensures models are reliable, ethical, and compliant. For generative AI, it also protects against unpredictable outputs and new forms of misuse.

By building a governance program grounded in global standards, backed by the right tools, and reinforced with measurable KPIs, enterprises can innovate with confidence while avoiding costly legal or reputational damage.

FAQs on GenAI Governance

You can start by extending existing risk, security, and data-science teams, but mature programs benefit from a cross-functional GenAI working group. A small core (legal, CISO office, data science lead, product owner) meets regularly, owns the governance roadmap, and reports up to the board.

Set two cadences: automated monitors run continuously, flagging drift, bias spikes, or abnormal prompt patterns; formal governance reviews occur quarterly or after any major change in data, model weights, or regulation.

Expect requests for model and system cards, data-lineage logs, eval results, incident records, and proof of human oversight. If your pipeline captures these artifacts automatically and stores them with tamper-evident controls, an audit becomes a document-export exercise rather than a scramble.
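One common way to get tamper-evident storage is a hash chain, where each record's hash covers the previous record's hash. This is a minimal sketch of that idea using only the standard library; the record fields and function names are assumptions, and a real deployment would typically add signing and write-once storage on top.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first record


def _digest(prev_hash: str, payload: dict) -> str:
    body = json.dumps(payload, sort_keys=True)  # stable serialization
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_record(log: list, payload: dict) -> None:
    """Append an audit record whose hash also covers the previous record."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, payload),
    })


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS_HASH
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["payload"]):
            return False
        prev_hash = record["hash"]
    return True


audit_log = []
append_record(audit_log, {"artifact": "model card", "version": "1.0"})
append_record(audit_log, {"artifact": "eval results", "passed": True})
```

An auditor can rerun `verify_chain` on the exported log to confirm that no record was altered after it was written.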

Open-source libraries cover testing, watermarking, and red-teaming basics, but many firms layer commercial platforms on top for access control, enterprise support, and integration with ticketing systems. Evaluate open and paid tools the same way you would any security stack: by fit, extensibility, and total cost of ownership.

Wrap third-party models in the same controls you apply to internal services: rate limiting, prompt filtering, output logging, and independent bias tests. Contract clauses should mandate timely disclosure of training data changes and security incidents, giving your generative AI governance program visibility even when you don’t control the underlying weights.

Move past pilot once the model meets performance targets, all checklist items are closed, and ownership for ongoing monitoring is documented. A go-live memo locks in accountability and makes sure every stakeholder accepts the residual risk before public launch.