Hire ChatGPT Developer

Our engineers build, fine-tune, and deploy ChatGPT solutions that slot into your stack without rewiring it. We map your workflows, pick the right model size, and train it on your domain data so responses sound like your brand and stay on-topic. From zero-shot prototypes to fully governed production systems, we handle prompt engineering, retrieval-augmented generation, latency tuning, PII redaction, and continuous evaluation. You get an AI teammate that answers customers, drafts content, and drives analytics—all guarded by role-based access, audit trails, and cost controls you can track in real time. Ready to launch a chatbot that speaks your language and respects your compliance rules? Let’s architect it together.

What We Offer

Architecture & Model Selection

We benchmark GPT-4o, Azure OpenAI, and open-source Llama-3 variants against your latency, cost, and privacy constraints, then design an API layer that hides vendor quirks and scales out on Kubernetes or serverless functions without lock-in.

Prompt Engineering & Evaluation

Our team codes prompt libraries that adapt on the fly to user intent, inject real-time context, and resist prompt injection. Every iteration ships with automatic regression tests that score accuracy, tone, and toxicity across thousands of edge-case scenarios.

Retrieval-Augmented Generation (RAG)

We stitch vector stores, hybrid search, and graph queries into a memory layer that cites sources instead of hallucinating. Chunking rules, embeddings choice, and caching strategies are tuned for your document mix—be it policy PDFs, SQL schemas, or Jira tickets.

Fine-Tuning & Domain Adaptation

Using Low-Rank Adaptation (LoRA) and parameter-efficient techniques, we teach the model sector-specific jargon without inflating token costs. Training pipelines run on spot GPUs with automated hyper-parameter sweeps and checkpoint fallback for fault tolerance.

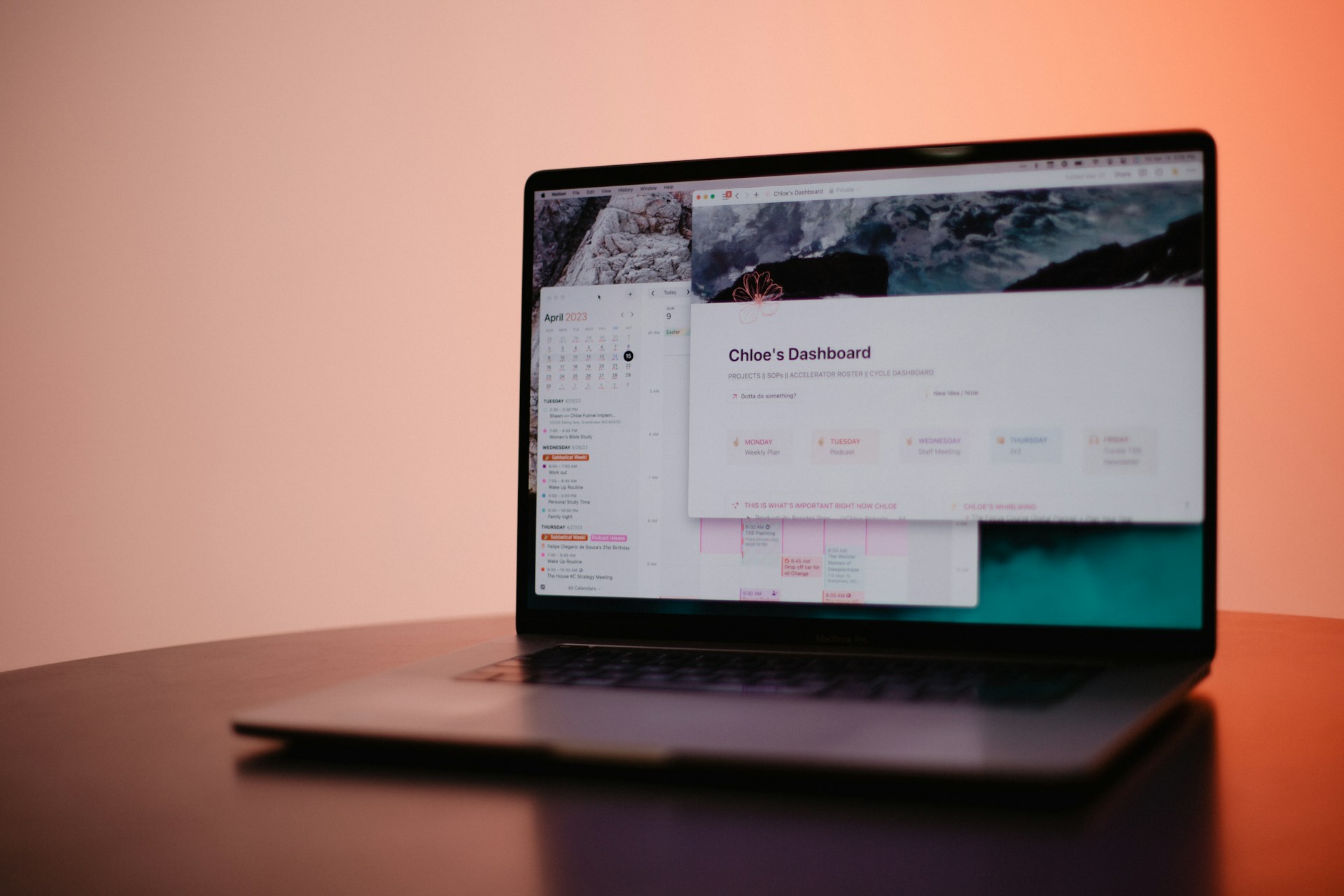

Monitoring & Cost Optimization

Live dashboards track token spend, latency, satisfaction scores, and drift. Usage spikes trigger autoscaling rules, while under-used endpoints down-shift to cheaper models so your finance team sees predictable AI OPEX.

How Hiring a ChatGPT Developer Benefits Your Business

Bringing a specialist on board turns conversational AI from a cool demo into a measurable growth engine.

1

Faster Customer Support Resolution

We fuse your ticket history with a retrieval pipeline that serves precise answers in seconds and flags edge cases for human review, cutting average handle time without sacrificing accuracy.

2

Lower Content Production Costs

The same model drafts knowledge-base articles, product descriptions, and internal briefs while preserving brand voice. Your writers shift from blank pages to high-value editing, freeing budget for campaigns that move the needle.

3

Revenue Lift Through Intelligent Upsell

Context-aware prompts surface add-ons, warranties, or premium tiers at the moment of purchase. Conversion logs feed back into the model, sharpening its cross-sell timing with every chat.

4

Deeper User Insights From Conversation Logs

We tag intents, sentiments, and objections in real time, then route that data into your BI platform. Marketing, product, and CX teams finally see what customers really want—no more guessing.

5

Reduced Compliance Risk Via Governed Responses

Built-in PII redaction, policy checks, and refusal flows keep every interaction inside your legal guardrails. Role-based throttling and audit trails satisfy auditors without slowing delivery.

6

Future-Proof Architecture That Scales With Model Upgrades

Our abstraction layer lets you swap models or vendors as prices drop and capabilities grow, so you never get stuck with yesterday’s AI.

Challenges We Commonly Solve

A promising ChatGPT proof-of-concept often stalls when it meets the complexity of real production traffic, legacy data, and strict governance rules. Here are the bottlenecks we remove:

Ready to remove these blockers and launch at scale?

Why Choose WiserBrand

You’re not just hiring coders—you’re plugging into a battle-tested AI delivery unit that ships governed, business-ready ChatGPT solutions at startup speed.

1

Proven LLM Launches Across Regulated Sectors

From healthcare triage bots to banking knowledge bases, we’ve moved ChatGPT from prototype to production while passing security audits on the first sweep.

2

Cross-Functional Squads on Day One

Each engagement spins up a blend of prompt engineers, full-stack devs, MLOps specialists, and UX writers—so discovery, build, and rollout run in the same sprint cadence.

3

Security-First Engineering DNA

PII redaction at token level, signed JWTs on every request, and end-to-end encryption are baked into our starter repo. Your infosec team sees the threat model before we write a single line of code.

4

Transparent Pricing and Live Cost Dashboards

We hook real-time token metering into Grafana, letting finance track spend per feature flag. No surprises, no black boxes.

5

Vendor-Agnostic Architecture

Our abstraction layer means you can pivot between OpenAI, Anthropic, or on-premise models with a single config change—keeping leverage on pricing and data residency.

6

Continuous Improvement Loop

Nightly evaluation pipelines grade new chat logs, trigger micro-fine-tunes, and push better prompts by sunrise. Your bot grows smarter while you sleep.

Cooperation Models

Pick the engagement style that aligns with your roadmap and budget.

A self-sufficient pod—prompt engineer, full-stack dev, and MLOps lead—joins your sprint rhythm, ships code under your repo, and syncs daily with your product owner. Velocity climbs without disrupting culture.

Add one or two ChatGPT specialists to your crew for a defined stretch. We handle recruitment, vetting, and onboarding; you direct the backlog. When the milestone ships, they roll off with zero handover drag.

Hand us a spec and a deadline, and we return a production-ready chatbot with docs, training sessions, and a runway of post-launch support hours. Scope, price, and timeline stay locked from day one.

Our Experts Team Up With Major Players

Partnering with forward-thinking companies, we deliver digital solutions that empower businesses to reach new heights.

Our Approach

We treat conversational AI like any other critical production service: measured, version-controlled, and continually improved.

Discovery & Data Audit

We inventory every knowledge source—CRM notes, ticket threads, policy PDFs—and map gaps that would cause hallucinations. The audit delivers a ranked backlog of must-index content and a privacy footprint you can share with legal.

Rapid Prototype & Prompt Design

Within the first sprint we stand up a sandbox endpoint, craft system and user prompts, and run A/B tests against real chat transcripts. Early metrics on accuracy, latency, and cost guide the architecture before heavy lifting begins.

Retrieval Layer & Fine-Tuning

We build the vector index, wire in hybrid search for structured data, and apply parameter-efficient fine-tunes so the model speaks your jargon without ballooning token counts. Guardrails for PII redaction and policy refusals go in at this stage.

Hardening & Integration

The service graduates to your staging stack behind OAuth, rate limits, and SLIs. We add observability hooks—token spend, satisfaction scores, drift alerts—and pipe them into your monitoring suite alongside existing microservices.

Launch, Evaluate, Improve

Go-live includes load testing, on-call playbooks, and a rollback path. Nightly evaluation jobs grade new conversations and auto-tune prompts, so response quality climbs while costs stay predictable.

Client Successes

Explore our case studies to see how our solutions have empowered clients to achieve business results.

Hire ChatGPT Developer FAQ

A proof-of-concept usually lands in two weeks. Production rollout—with guardrails, retrieval layer, and dashboards—averages six to eight.

Yes. We deploy the retrieval index and all logs inside your VPC, route only sanitized prompts to the model API, or run an on-premise LLM if policy demands zero external calls.

Real-time metering tags every request by feature flag. Autoscaling rules downgrade low-priority calls to lighter models and cache frequent answers, so finance sees flat curves, not spikes.

If your domain language is unique or compliance wording must be verbatim, we fine-tune. If the knowledge base changes weekly, RAG wins. Many clients use a hybrid: light fine-tune plus dynamic retrieval.

GPT-4o supports over 50 languages out of the box. We layer language-specific prompts, vector indexes, and fallback rules so users get native-level replies without cross-talk.